TL;DR

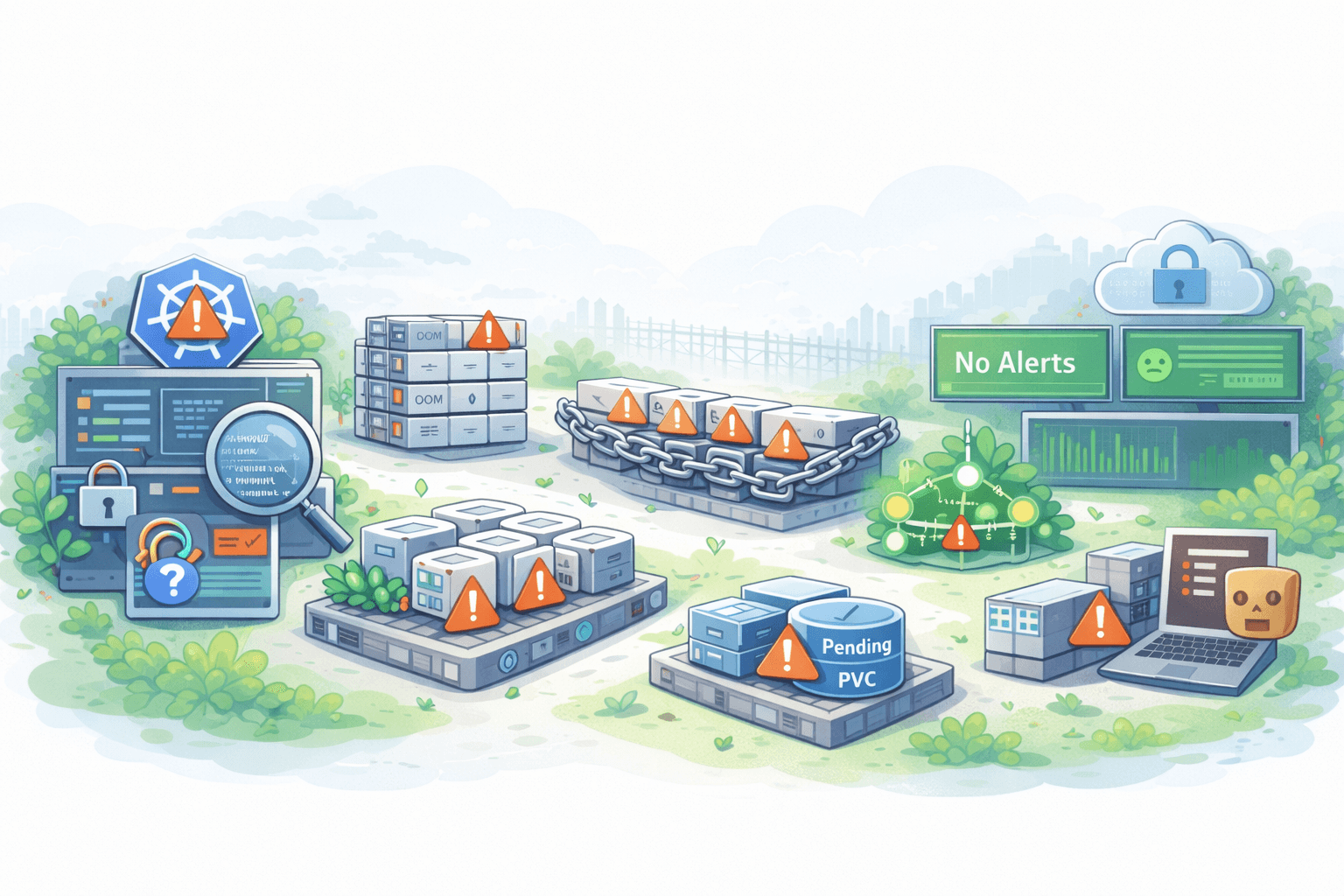

- Most outages start with small config gaps, not “bad Kubernetes.”

- RBAC mistakes can turn one compromised pod into a cluster-level incident.

- Missing requests and limits often lead to noisy neighbors, OOMKilled pods, and node pressure.

- Bad probes cause restart loops, broken rollouts, and “it works on my laptop” debugging.

- NetworkPolicy errors either block real traffic or allow far too much lateral movement.

- Without alerts, you learn about incidents from customer tickets, not dashboards.

- Drift and YAML sprawl quietly break environments over time.

- Storage configs fail at the worst moment: deploy day, scale day, or node replacement day.

Misconfiguration is the primary cause of Kubernetes incidents, and it affects every organization regardless of how much Kubernetes expertise the team has. The platform does exactly what your configuration tells it to, so your workloads can deploy and run and you can still end up with downtime. The remedy is the right checklist and the right partner at your side.

According to the 2025 Komodor Enterprise Report, 79% of Kubernetes production outages were linked to configuration and change issues, showing how often setup errors, not core platform flaws, trigger real downtime.

In this article, you will see 10 frequent outage triggers. One Kubernetes misconfiguration can knock out a service, break rollouts, or block traffic in ways that look like “random instability,” until you trace it back to configuration.

Each issue follows the same pattern: Symptom → Fix → Prevent. Use it as a checklist for reviews, incident postmortems, and pre-prod gates.
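
As a rough illustration of what a pre-prod gate could look like, the sketch below scans a Deployment-shaped dictionary for two of the gaps from the TL;DR: missing requests or limits, and a missing readiness probe. The function name `find_config_gaps`, the sample manifest, and the choice of checks are all hypothetical; a real gate would more likely parse YAML files and use a policy tool your team already trusts.

```python
from __future__ import annotations

from typing import Any


def find_config_gaps(manifest: dict[str, Any]) -> list[str]:
    """Return findings for each container in a Deployment-like manifest.

    Hypothetical helper: it only checks resources and readinessProbe.
    """
    findings: list[str] = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        resources = container.get("resources", {})
        # Flag containers that omit either requests or limits.
        for field in ("requests", "limits"):
            if not resources.get(field):
                findings.append(f"{name}: missing resources.{field}")
        # Flag containers that have no readiness probe at all.
        if "readinessProbe" not in container:
            findings.append(f"{name}: missing readinessProbe")
    return findings


# Hypothetical manifest, assumed to be already parsed from YAML into a dict.
sample = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {
            "name": "api",
            "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
            "readinessProbe": {"httpGet": {"path": "/healthz", "port": 8080}},
        },
        {
            "name": "worker",
            "resources": {
                "requests": {"cpu": "50m", "memory": "64Mi"},
                "limits": {"cpu": "200m", "memory": "128Mi"},
            },
        },
    ]}}},
}

for finding in find_config_gaps(sample):
    print(finding)
```

Under these assumptions, the script reports that `api` has no limits and `worker` has no readiness probe. A CI job could fail the build whenever the returned list is non-empty.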